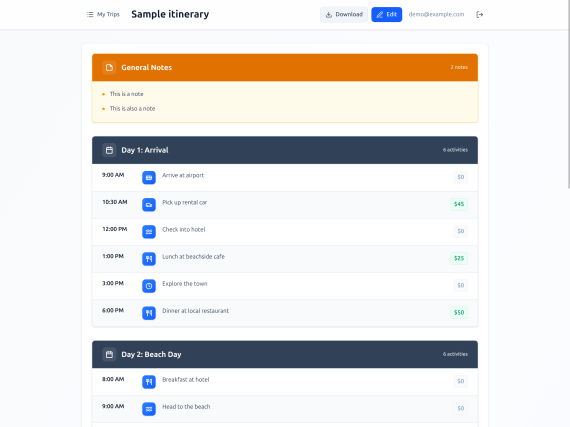

My reading appetite has been weak again this year, which I blame on two things: 1) Slay the Spire being way too good of a video game, and 2) starting a new job, which has claimed more of my mental energy.

But I did manage to read some stuff! So without further ado, here are the book reviews:

Quick links

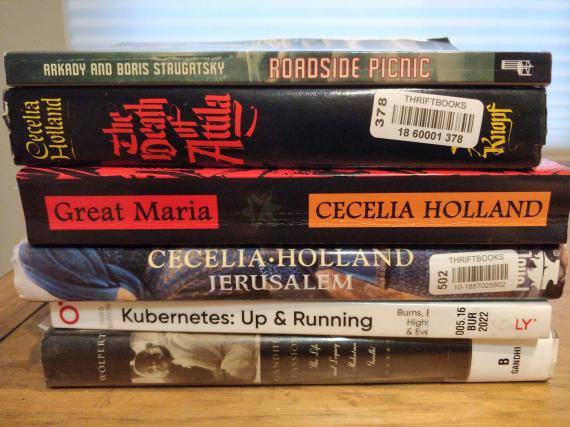

- Great Maria by Cecilia Holland

- Antichrist: A Novel Of The Emperor Frederick II by Cecilia Holland

- Jerusalem by Cecilia Holland

- More Everything Forever: AI Overlords, Space Empires, and Silicon Valley’s Crusade to Control the Fate of Humanity by Adam Becker

- Meditations by Marcus Aurelius

- Roadside Picnic by Arkady and Boris Strugatsky

- Gandhi’s Passion: The Life and Legacy of Mahatma Gandhi by Stanley Wolpert

Great Maria by Cecilia Holland

Like last year, I read quite a few Cecilia Holland books. I still think she’s an extraordinary writer of historical fiction, and her ability to conjure up so many vivid worlds across so many different eras and cultures is remarkable.

This book, though, I had a really hard time getting into. It’s simply a lot slower-paced than the other books of hers I’ve read, which center on male characters and are more about swashbuckling action, war, etc. Maybe I’m just a simple-minded man, but I like that kind of stuff.

The main character here is (somewhat unusually for Holland) a woman, and it’s mostly about how she asserts control over her life despite the domineering men around her (who are in fact doing a lot of warring and swashbuckling, often off-screen).

The most interesting bit for me (as in Jerusalem below) is the clash of cultures between East and West – in this case, Normans in Sicily (something I had no idea had happened) colliding with Muslims in the same region (another thing I was ignorant of – blame my American education!).

My mom and sister absolutely adore this book, and I can’t say I wouldn’t recommend it, but it’s kind of a slow burn compared to Holland’s other books.

Antichrist: A Novel Of The Emperor Frederick II by Cecilia Holland

This is another great medieval tale, again built around a clash of civilizations between Christians and Muslims, but centered on the larger-than-life figure of Frederick II, who apparently had plenty of enemies on the Christian side and a lot of sympathy for the Muslim side. A polymath who reportedly spoke six languages (including Arabic and Greek), he spends much of the book trying to dream up a Crusade mostly for his own glory, even as the Pope brands him a heretic and “antichrist.” He’s a big, bombastic character, full of contradictions, containing multitudes.

I knew very little about Frederick II before reading this book, so I really enjoyed the way Holland brought him to life. I’ll definitely never see the character the same way again when I decide to play as the Holy Roman Empire in Civ.

Jerusalem by Cecilia Holland

Perhaps unsurprisingly, given how much I love Holland’s more action-packed books about clashes of civilizations, this may be my all-time favorite book of hers. I just find the Crusades fascinating overall (the apocalyptic mindset of the crusaders, the strangeness and surprising tolerance of the Muslims compared to their European counterparts, the religious fervor on both sides).

I knew almost nothing about the “Crusader kingdoms” of the Middle Ages and was surprised to learn that Jerusalem once had a French-speaking king. That’s just one of the many surprises and vivid details you get from Cecilia Holland’s work.

More Everything Forever: AI Overlords, Space Empires, and Silicon Valley’s Crusade to Control the Fate of Humanity by Adam Becker

I read enough tech journalism that most of this book didn’t surprise me, but I still enjoyed it. I mostly appreciated its realist take on how silly some of these ideas are: terraforming Mars, or forgoing generosity toward existing humans in favor of trillions of theoretical unborn ones (“longtermism”). I get the feeling that a lot of the tech elite have watched a little too much Star Trek and would benefit from rounding out their education with a bit more physics, ecology, and philosophy.

Meditations by Marcus Aurelius

…Which leads us to the next book I read. I’ve been getting more and more interested in religion and philosophy lately (Bart Ehrman’s writings on Christianity started that), and I wanted to read one of the classic texts of Stoicism, written by the 2nd-century Roman emperor.

I admit I struggled to find much in this book that I could apply to my own life (at one point he says “avoid looking on your slaves with lust” – okay Marc, I’ll remember that), but it is always interesting to read primary sources and understand how the ancients actually thought. The other jarring thing is the contrast between his chill, thoughtful philosophy and the relentless warfare of his actual reign. I guess, like Frederick II, a lot of historical figures contained multitudes.

Roadside Picnic by Arkady and Boris Strugatsky

A few years ago I made an effort to read the greatest hits of sci-fi and dystopian fiction, and somehow I missed this one. It’s a short and really fun read, with lots of vivid characters and surprising twists. I would hate to spoil anything about it, but I’d say that if you enjoyed Jeff VanderMeer’s Annihilation and the rest of the Southern Reach series, you’ll love this one.

Gandhi’s Passion: The Life and Legacy of Mahatma Gandhi by Stanley Wolpert

I haven’t finished this book yet, but I’ll optimistically add it to this year’s list. I happened to re-watch Richard Attenborough’s Gandhi this year, and I found myself riveted. Gandhi to me is more interesting as a religious or philosophical figure than a historical one, but I wanted to learn a bit more about his life since the film is just a summary (and has been accused of skipping important details and indulging in hagiography).

So far, the most interesting parts for me are how much of Gandhi’s philosophy was informed by Christianity and Christian thinkers (the Sermon on the Mount was a huge inspiration for him) and the role of his vegetarianism, which seems to have effectively launched his career in community organizing and advocacy (as he struggled to find meatless dishes in London). As a vegetarian myself, I find a lot of his perspective persuasive, although I doubt I could subject myself to the monk-like discipline he strove for.